I've been trying to replicate Hammer's automatic face alignment in Blender using Python. I'm looking to get it 1 to 1.

I know from Valve that Face Alignment works by projecting on to the face depending on its angle.

I've written some code that goes over every face in my object in Blender and rotates a camera (which sits at the world origin, (0, 0, 0)) towards it, then UV-projects onto it. To put it another way, I'm replicating Face Alignment by getting the angle of the face, using that angle to rotate a camera (so the camera's view plane is parallel to the surface), and then projecting a UV from the camera view onto the surface.

This actually works perfectly... for objects that are flat on the grid. But all other angles don't work quite right.

My issue is not that the process doesn't work, just that there isn't information I can find on how Hammer handles these arbitrary angles.

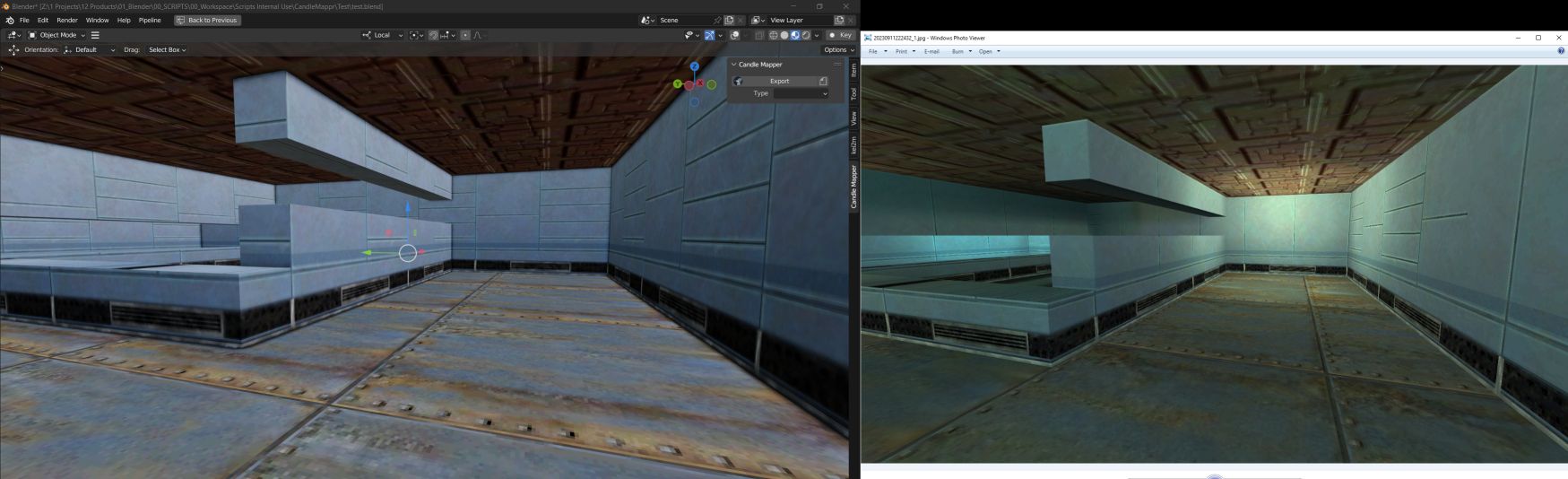

Left is Blender, right is Hammer. The textures are aligned 1 to 1. All of the mesh lies flat on the grid.

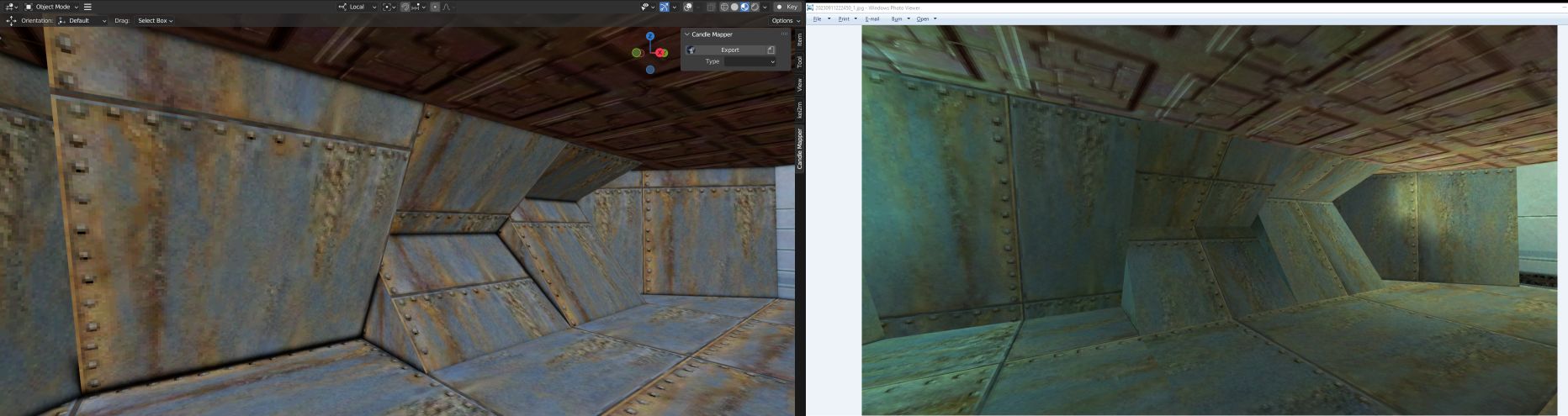

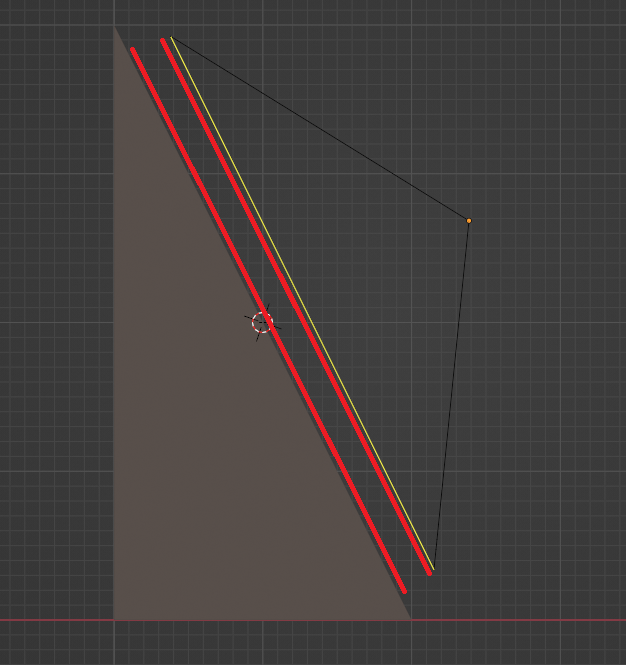

These objects are at an angle and aren't matched up.

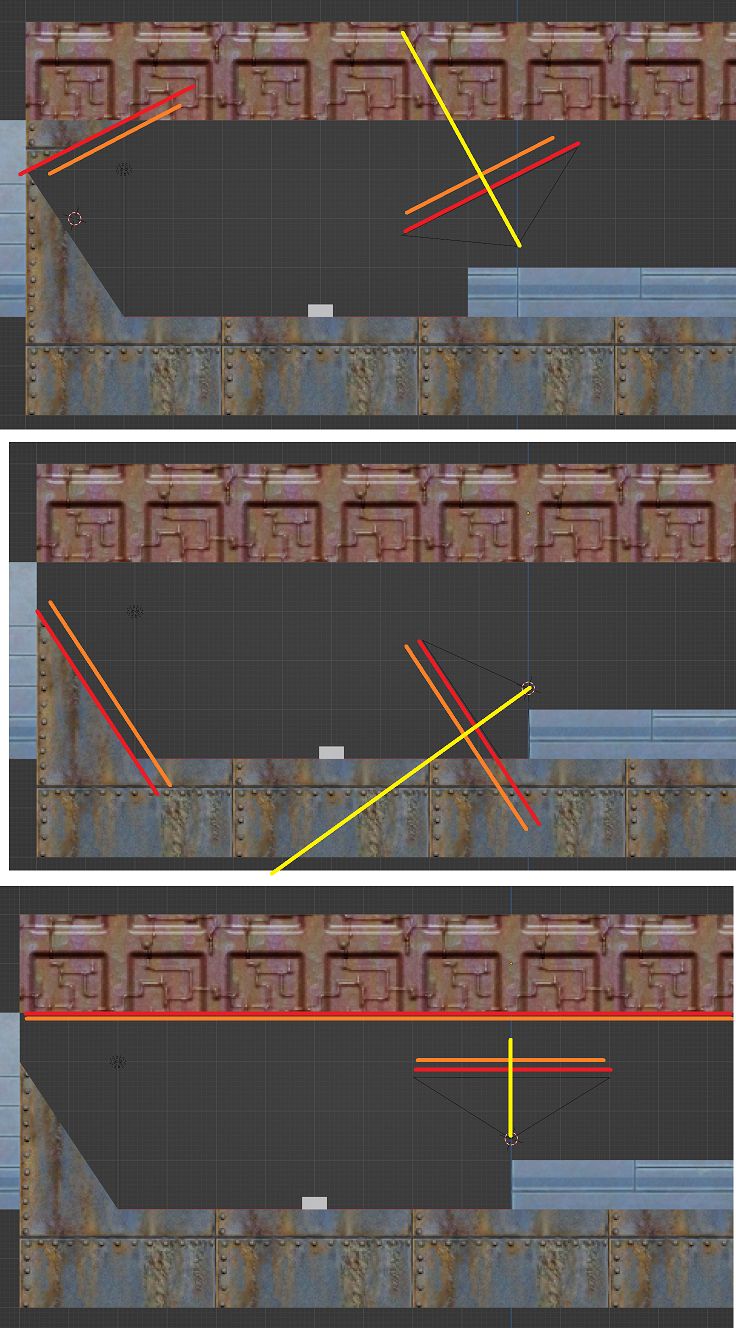

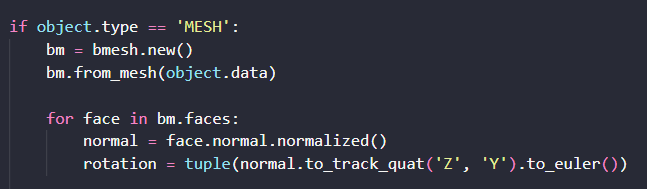

The following is how my script works:

The camera stays in the same location (the world origin) and rotates to match the angle of the face. It then projects the uv from the camera view. It does this for every face.

Hammer Face Alignment in Blender

Created 1 year ago (2023-09-11 23:08:04 UTC) by Candle

Posted 1 year ago (2023-09-11 23:08:04 UTC)

Post #347853

Posted 1 year ago (2023-09-12 10:04:16 UTC)

Post #347854

Have you tried, instead of projecting, to transform the face's world UV coordinates by the face's normal vector?

Essentially you want the UV coordinate plane to become aligned to the face's plane.
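One way to picture this suggestion is to build two perpendicular axes that lie in the face's plane and read each vertex's UV off those axes. The following is only a rough sketch in plain Python: the names `face_uv_axes` and `face_aligned_uv`, the choice of world Z as the reference "up" direction, the fallback for horizontal faces, and the 128x128 texture size are all assumptions made for illustration. It is not Hammer's actual code, and axis signs or flips may differ from what Hammer produces.

```python
import math


def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(dot(v, v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def face_uv_axes(normal, up=(0.0, 0.0, 1.0)):
    """Return two unit vectors (u_axis, v_axis) lying in the face's plane.

    `up` is an assumed reference direction; it only decides how the
    texture is rotated within the plane.
    """
    n = normalize(normal)
    # For (nearly) horizontal faces the reference is parallel to the
    # normal, so fall back to world Y (an assumption of this sketch).
    if abs(dot(n, up)) > 0.999:
        up = (0.0, 1.0, 0.0)
    u_axis = normalize(cross(up, n))
    v_axis = cross(n, u_axis)  # already unit length: n and u_axis are orthonormal
    return u_axis, v_axis


def face_aligned_uv(vertex, normal, texture_size=(128.0, 128.0)):
    """Project a world-space vertex onto the face's own UV axes."""
    u_axis, v_axis = face_uv_axes(normal)
    return (
        dot(vertex, u_axis) / texture_size[0],
        dot(vertex, v_axis) / texture_size[1],
    )
```

Because both axes lie in the face's plane, distances measured along the face are preserved, so the texture is not stretched on angled faces. The world position of the vertex still feeds into the result, which is what keeps neighbouring faces with the same normal lined up.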

Posted 1 year ago (2023-09-12 11:21:27 UTC)

Post #347855

Blender doesn't exactly have a world UV coordinate system (as far as I know), at least not like Hammer does. So the problem there is what to transform the face's UV from, since Blender doesn't give you a reliable starting place.

What I tried, which I believe does something similar, is to move the camera into a location that aims directly at the face, rather than keeping it at the world origin. That way it more closely replicates this behaviour. But it still isn't accurate, since even face projection still takes the world location into account. Maybe what you're suggesting is using this texture projection and offsetting from that based on the world location of the face?

Posted 1 year ago (2023-09-12 12:04:58 UTC)

Post #347856

For the world planes, you can find a vertex's corresponding UV (assuming no scaling/shift/rotation) by projecting the vertex coordinates flatly onto the nearest world plane (e.g. XY for an upward-facing face) and dividing by the texture's dimensions (since UV coords are relative to texture dimensions).

Problem is just figuring out which world plane to use (XY / XZ / YZ) which depends on the face angle.
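A common way to choose the plane is to look at which component of the face normal has the largest absolute value: the face is "most perpendicular" to that axis, so the other two axes form the nearest world plane. Here is a minimal sketch of that idea in plain Python. The function names, the tie-breaking order, the 128x128 texture size, and the choice of which coordinate becomes U and which becomes V are assumptions for illustration; Hammer's own conventions (including any axis flips) may differ.

```python
def nearest_world_plane(normal):
    """Pick the world plane a face is closest to, from its normal.

    The dominant (largest absolute) normal component is the axis the
    face points along, so the remaining two axes form the plane.
    Ties are broken in favour of XY, then YZ (an arbitrary choice).
    """
    ax, ay, az = (abs(c) for c in normal)
    if az >= ax and az >= ay:
        return "XY"  # floor or ceiling
    if ax >= ay:
        return "YZ"  # wall facing along X
    return "XZ"      # wall facing along Y


def world_aligned_uv(vertex, normal, texture_size=(128.0, 128.0)):
    """Flatten a vertex onto the nearest world plane and scale to UV space."""
    x, y, z = vertex
    plane = nearest_world_plane(normal)
    if plane == "XY":
        u, v = x, y
    elif plane == "YZ":
        u, v = y, z
    else:  # "XZ"
        u, v = x, z
    # UVs are relative to the texture's size, so divide by its dimensions.
    return (u / texture_size[0], v / texture_size[1])
```

For example, `world_aligned_uv((64.0, 32.0, 10.0), (0.0, 0.0, 1.0))` drops the Z coordinate and returns the X and Y coordinates divided by the texture size. On a tilted face this projection stretches the texture, which is expected for world alignment.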

Posted 1 year ago (2023-09-12 13:45:42 UTC)

Post #347857

So what you're saying is

- I can reuse my existing code (see below) to determine the rotation of a face in world space.

- Then, I find which world plane (like XY, XZ, or YZ), I suppose by comparing the rotation values to find what it's closest to.

- Then project from the world origin onto those faces, which aligns all of them to the world UV, even if they'll be stretched by the projection (so does this give me Hammer's 'World Alignment'?)

- Last, transform the face's world UV coordinates using the face's normal vector. I assume this involves simulating the rotation of the face in the UV space?

In those cases, even with the camera at the world origin, it treats the world UV as if it's at an angle. But with this new method, it aligns it with the world.

Still, when I transform the face by the normal, rotating it, won't that have the same result of having that rotated UV map anyway?

I've been doing Python in Blender for several years now but I'm out of my depth on this one. Never had to deal with normals and all this stuff before, not really sure how they work. Might just have to give up on this tbh, it's been a while I've been trying.

Posted 1 year ago (2023-09-12 18:15:28 UTC)

Post #347858

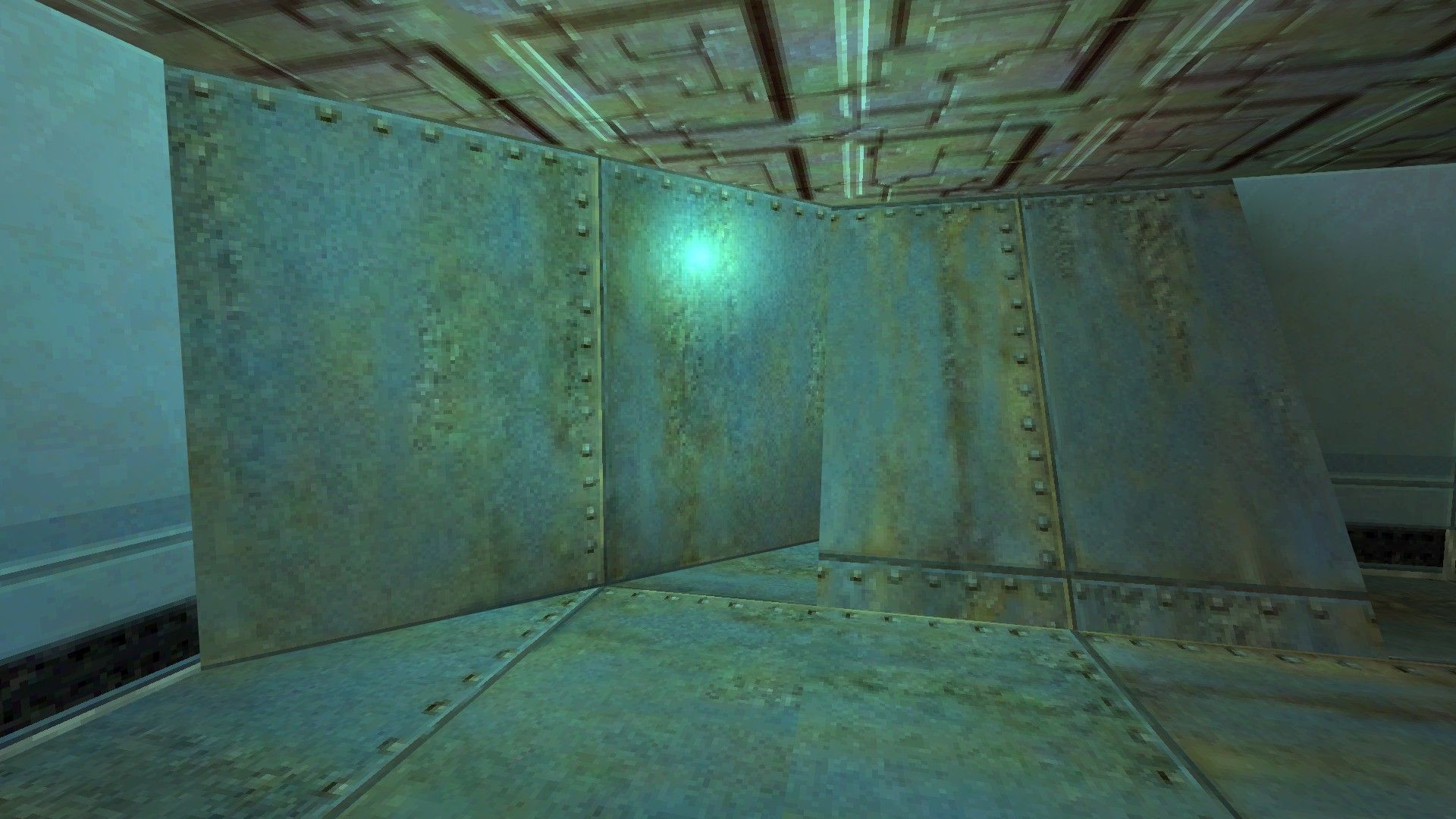

I think I figured out your suggestion, and it seems to work when doing it manually. I'm now gonna write a script to do it and see how it goes. Thank you for helping me out!

Posted 1 year ago (2023-09-12 19:13:37 UTC)

Post #347859

Great to hear it worked when done manually, hope the script works!

As for normal vectors, it's just a vector that stands perpendicular out from a plane, basically the plane's orientation (technically it's a vector standing perpendicular from a pair of vectors, but in this context a plane is just that pair of vectors).

Had to mess with this sort of stuff when making Map2Prop. 😄
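To make the "perpendicular to a pair of vectors" part concrete, here is a small plain-Python sketch that computes a face normal from three of its vertices using the cross product of two edge vectors. The function name `face_normal` is made up for this example. In Blender you normally don't need to compute this yourself, since mesh polygons and BMesh faces already expose a `normal` attribute.

```python
import math


def face_normal(p0, p1, p2):
    """Unit normal of the plane through three points.

    The two edges leaving p0 are the "pair of vectors" spanning the
    plane; their cross product is perpendicular to both. The vertex
    order (winding) decides which side the normal points to.
    """
    e1 = tuple(b - a for a, b in zip(p0, p1))
    e2 = tuple(b - a for a, b in zip(p0, p2))
    n = (
        e1[1] * e2[2] - e1[2] * e2[1],
        e1[2] * e2[0] - e1[0] * e2[2],
        e1[0] * e2[1] - e1[1] * e2[0],
    )
    length = math.sqrt(sum(c * c for c in n))
    if length == 0.0:
        raise ValueError("points are collinear, no unique normal")
    return tuple(c / length for c in n)


# A triangle lying flat on the XY plane, counter-clockwise seen from above,
# has a normal pointing straight up along +Z.
flat = face_normal((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
```

Reversing the vertex order flips the normal to point the other way, which is why winding order matters when deciding which side of a face is the front.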
