VERC: Real-Time Photorealism

Last edited 2022-09-29 07:55:26 UTC

You know you've come across an impressive rendering system when you find yourself wondering "is this really generated, because it looks a helluva lot like a photo". I, for one, have had that happen to me around... twice. In over two years of viewing the Flipcode image of the day, I have nearly mistaken a rendered image for a photo twice. That's an average of once a year, and goes to show that we're still a long way off from photorealistic rendering in the mainstream - not to mention doing so in real-time. This article will seek to find out just why we can't have photorealistic rendering in real-time, and just when we will be able to have it.

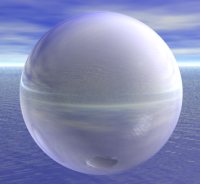

When dealing with computer graphics, we're focusing on one simple thing - light. An unimaginable number of photons - tiny particles of light - are currently bouncing around the room you're sitting in and finding their way into your eye. The more photons, the more light; in a totally black room, there are relatively few photons bouncing around compared to a sunlit room. So why can't we do this on a PC? All we have to do is bounce around some virtual photons and see which ones smack into the camera - don't we?

It's not quite as simple as that. You see, when I say "an unimaginable amount", I really mean it - an amount so huge that the brain simply cannot comprehend it properly. To demonstrate the fact that the brain just can't think about the size of large numbers properly, here's a little off-topic exercise for you which I once read a professor gave to his students. Here's a number line:

|----------------------------------|

million                     trillion

Where were we? Ah, yes; the number of photons. The problem is that the number of photons is simply too large for any present-day computer to cope with. We just can't simulate the way light really behaves at any reasonable speed (indeed, if we tried, we'd probably still be waiting for it to finish one frame in a few hundred years). So, we use shortcuts. And lots of them.

A bog-standard method of lighting some polygons is to calculate, at each vertex of each polygon, the amount of light reflected back to the 'eye' (the camera) from a 'point light' (an infinitely small point in space which radiates light in all directions), then just interpolate this across the polygon to get the lighting at a specific pixel. This type of lighting is called 'diffuse' lighting, and is based on the assumption that surfaces scatter light equally in all directions (there's also 'specular' lighting, which deals with the light that gets reflected directly off the surface). Unfortunately, this per-vertex lighting (a) looks dreadful when the surface isn't very high resolution, polygon-wise, and (b) isn't realistic. No, sir, this is decidedly unrealistic.
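To make the per-vertex idea concrete, here's a minimal sketch in Python. It assumes the usual Lambert cosine rule for diffuse light (brightness is the dot product of the surface normal and the direction to the light, clamped at zero). The triangle, the light position and every function name are made up for illustration; none of them come from a real engine.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def diffuse_at_vertex(position, normal, light_pos):
    """Lambert's cosine rule: brightness = max(0, N . L)."""
    to_light = normalize(tuple(l - p for l, p in zip(light_pos, position)))
    return max(0.0, dot(normalize(normal), to_light))

def interpolate(values, weights):
    """Blend per-vertex values using barycentric weights that sum to 1."""
    return sum(v * w for v, w in zip(values, weights))

# A triangle lying flat on the floor (y = 0), with normals pointing straight up.
triangle = [(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)]
up = (0.0, 1.0, 0.0)
light = (-1.0, 1.0, 0.0)  # hypothetical point light, directly above vertex 0

# Light each vertex once, then blend those values for any pixel inside.
vertex_light = [diffuse_at_vertex(p, up, light) for p in triangle]
centre = interpolate(vertex_light, (1 / 3, 1 / 3, 1 / 3))

print("per-vertex brightness:", vertex_light)
print("interpolated at the centre:", centre)
```

The lighting sum only runs three times per triangle, which is why it's cheap, and also why a big triangle with few vertices ends up looking so flat.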

Problem (a) can be conquered by using what's called Phong shading (per-pixel calculations), but that still doesn't alleviate problem (b). You see, photons don't just go from the light source, to the object, to the eye, with nothing in between - they bounce around the room. If you have a totally dark room and you turn on a light, the shadows cast by objects aren't pitch black, because light bounces off the walls and into the shadowed areas, lightening them a bit. Couple that with the fact that real light sources are never, ever one infinitely small point, and you've got a decidedly unrealistic scene.
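Here's a rough sketch of why per-pixel lighting fixes problem (a). It assumes the same Lambert diffuse rule as before and a made-up scene: a long floor edge with a point light hovering just above its midpoint. The names `gouraud_mid` and `phong_mid` are only labels for this example.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lerp(a, b, t):
    """Linear interpolation between two vectors."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))

def lambert(position, normal, light_pos):
    to_light = normalize(tuple(l - p for l, p in zip(light_pos, position)))
    return max(0.0, dot(normalize(normal), to_light))

# Two vertices of a wide floor polygon, and a light just above the middle.
left, right = (-2.0, 0.0, 0.0), (2.0, 0.0, 0.0)
up = (0.0, 1.0, 0.0)
light = (0.0, 1.0, 0.0)
t = 0.5  # the pixel halfway between the two vertices

# Per-vertex: light the two ends, then interpolate the brightness.
i_left = lambert(left, up, light)
i_right = lambert(right, up, light)
gouraud_mid = i_left + (i_right - i_left) * t

# Per-pixel: interpolate position and normal, then light the pixel itself.
phong_mid = lambert(lerp(left, right, t), lerp(up, up, t), light)

print("per-vertex midpoint:", gouraud_mid)
print("per-pixel midpoint: ", phong_mid)
```

The per-vertex version never notices the bright spot under the light because neither vertex is anywhere near it; the per-pixel version does, at the cost of running the lighting sum for every pixel.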

The encapsulating term for illuminating a scene correctly, with light bouncing off surfaces to light other surfaces, is 'global illumination'. There are three major global illumination algorithms currently floating around - radiosity (which is what Half-Life's RAD program uses), Monte Carlo illumination, and photon mapping - with various other lesser-used ones applying to more specific situations. These are all very slow processes, and have to be applied beforehand, removing the possibility of correct dynamic per-frame lighting - a blow to real-time photorealism. Still, it can be done with static scenes. Or not.
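To give a flavour of why these methods are slow, here's a toy Monte Carlo sketch. It is not a full global illumination solver: it only estimates how much light a single surface point receives from a uniformly bright sky by firing random rays over the hemisphere above it. The sky function and all names are assumptions for illustration; the exact answer for this setup is pi, so you can watch the estimate creep towards it as the sample count goes up.

```python
import math
import random

def sample_hemisphere(rng):
    """Uniformly random unit direction on the hemisphere around +z."""
    z = rng.random()                      # cos(theta), uniform in [0, 1)
    phi = 2.0 * math.pi * rng.random()
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(phi), r * math.sin(phi), z)

def sky_radiance(direction):
    """Hypothetical environment: the sky is equally bright everywhere."""
    return 1.0

def estimate_irradiance(samples, rng):
    """Average of radiance * cos(theta) / pdf over random directions."""
    pdf = 1.0 / (2.0 * math.pi)           # uniform over 2*pi steradians
    total = 0.0
    for _ in range(samples):
        d = sample_hemisphere(rng)
        cos_theta = d[2]                  # the surface normal is +z
        total += sky_radiance(d) * cos_theta / pdf
    return total / samples

rng = random.Random(1)
for n in (10, 1000, 20000):
    print(n, "samples:", estimate_irradiance(n, rng))
```

That's thousands of rays for one point with one bounce and nothing in the way. A real scene needs this for every visible point, with each ray possibly bouncing again, which is where the hours of pre-processing go.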

Sure, there's the problem of basic lighting, but that's not all we can see - reflections, refractions, shadows, you name it, we can see it. Reflections are currently being conquered by the use of a technique called 'environment mapping' - mapping a pre-defined environment onto an object (although 'cube environment mapping', one of the few environment mapping methods, allows dynamic generation of the reflections). However, environment mapping gives perfect reflections, with no flaws - which is, unfortunately, unlike lots of real-life objects. If you look at a fairly reflective object, you'll see that the reflections are not perfect - they're slightly blurred, depending on the material the object is made of. It's this dependency on the object's material that strikes another blow to real-time photorealism.
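The core of environment mapping fits in a few lines. This sketch assumes the standard mirror-reflection formula R = I - 2(N.I)N and a simple "largest axis wins" rule for choosing a cube map face; the function names and face labels are illustrative, not taken from any particular graphics API.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect(incident, normal):
    """Mirror the incoming view direction about a unit-length normal."""
    d = dot(incident, normal)
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))

def cube_face(direction):
    """Pick the cube map face the direction points at (its largest axis)."""
    axis = max(range(3), key=lambda k: abs(direction[k]))
    sign = "+" if direction[axis] >= 0 else "-"
    return sign + "xyz"[axis]

view = (0.0, -1.0, 0.0)    # looking straight down...
normal = (0.0, 1.0, 0.0)   # ...at a floor that faces straight up

r = reflect(view, normal)
face = cube_face(r)
print("reflected direction:", r)
print("look up the colour on cube face:", face)
```

Notice that nothing in here knows about the material: every surface gets a perfectly sharp mirror image, which is exactly the flaw described above.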

Then there's refraction. If you can't remember it from your school physics lessons, refraction is the name given to light getting 'bent' as it goes through a transparent or translucent object (due to the speed of light inside the object being different from its speed in the air). Again, environment mapping can be used to do refraction, but it's much more complicated and has the same caveats as reflection - the refraction is never perfect. We're really breaking real-time photorealism down, aren't we?

"Ah, you can't go wrong with shadows, though, can you?" you say. Well, unfortunately, we're not doing too well there either. What with up-and-coming games such as Doom 3 boasting spiffy real-time shadows (using a technique commonly called 'stencil shadows' or 'shadow volumes'), you'd think we're coming along fine in that area. However, if you take a look at a screenshot of a shadow from Doom 3, then look at your own shadow, you'll see one major difference - the game's shadows have very hard edges, whereas the real-life ones get more blurred the further they are from the object that caused them. 'Soft shadows', as they are known, look much better than 'hard shadows', and are much more realistic - but they're much more complex to achieve with any degree of speed or accuracy.

As this article has shown, we're a long way from real-time photorealistic graphics - a hell of a long way. Indeed, I think it'll stay that way for a long time to come. Moore's Law, in its popular form, says that computational power doubles every 18 months, so if we think that it takes a top-of-the-range non-real-time renderer about five hours to produce a near-photorealistic image on a 3 GHz PC, then that'll mean that in 18 months it'll take around 2.5 hours. That's a long time, and if you keep working it out, you'll find that it's quite a large number of years until we're down to sub-1-second times.
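If you'd rather not keep halving by hand, the Moore's Law sum above can be worked out directly. This sketch takes the article's own figures (five hours per frame, halving every 18 months) at face value; the 30 frames per second target is my own assumption for what counts as "real-time".

```python
import math

DOUBLING_PERIOD_YEARS = 1.5        # "doubles every 18 months"
START_SECONDS = 5 * 60 * 60        # five hours per frame = 18,000 seconds

def years_until(target_seconds):
    """Halvings (and years) needed to get a frame down to the target time."""
    halvings = math.ceil(math.log2(START_SECONDS / target_seconds))
    return halvings, halvings * DOUBLING_PERIOD_YEARS

print("under 1 second per frame:", years_until(1.0))
print("30 frames per second:    ", years_until(1 / 30))
```

Under those assumptions it takes 15 halvings, or 22.5 years, to get under a second per frame, and 20 halvings, or 30 years, to reach 30 frames per second.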

The techniques we're coming up with have come a long way, and still have a long way to go. Even so, the future of photorealistic computer graphics is looking bright, and with new hardware coming out from ATi and nVidia giving even more polygon-pushing power, we can look forward to huge leaps in technology.

Answer to earlier question

In answer to my earlier challenge, here's what you should've got (or thereabouts):

|-|--------------------------------|

million                     trillion

Surprisingly close to 1 million, isn't it?

- Article Credits

- Francis 'DeathWish' Woodhouse – Author

This article was originally published on Valve Editing Resource Collective (VERC).

The original URL of the article was http://collective.valve-erc.com/index.php?doc=1042230580-65380800.

The archived page is available here.

TWHL only publishes archived articles from defunct websites, or with permission.

For more information on TWHL's archiving efforts, please visit the TWHL Archiving Project page.
