Entity Programming - Introduction to Entities with Animated Models

Last edited 2022-01-04 19:00:18 UTC


Half-Life Programming

- Entity Programming - Overview

- Entity Programming - Writing New Entities

- Entity Programming - Timer Entity

- Entity Programming - Inheritance VS Duplication (Creating Simple Variants of Existing Entities)

- Entity Programming - Player Interaction

- Entity Programming - Handling Player Input

- Entity Programming - Introduction to Entities with Animated Models

- Entity Programming - Temporary Entity Effects

- Entity Programming - Save/restore

Opening words

Half-Life has a neat animation system, at least for 1998. It has skeletal animation, which is a big step up from Quake's vertex animation; however, most people seem to stop there. There is a lot more to it, though. For example, it is possible to rotate certain bones from code, which can be very useful for entities like security cameras or robot arms. It is also possible to attach entities to bones, and much more. We'll analyse it all in 3 aspects, gradually linking one to another:

- Conceptual - the concepts of animation in Half-Life

- Low-level - how we can directly control animation

- High-level - high-level wrappers for the low-level animation parameters

Concepts of animation in Half-Life

You probably already know what animation is, and perhaps what a skeleton is. Half-Life models consist of bones that can change position and orientation depending on the current animation keyframe. In other words, they can be animated. For the purposes of a conceptual explanation, these animations are defined by a name, a length in keyframes and a framerate. Note that they're called "sequences" in the Half-Life SDK. Besides bones and sequences, Half-Life models also contain the following:

- Attachments - special points, each defined relative to a bone, that are used to easily attach things to the model (e.g. the spot where a muzzle flash appears)

- Bodyparts/submodels - different variants of the model that can be chosen between (e.g. Barney's pistol)

- Bone controllers - special properties that can affect multiple bones and their properties like translation or rotation. They're defined by ID, type and range (for example, bone controller 0 changes the orientation on the Z axis between -180 and +180 degrees)

- Hitboxes - bone-aligned boxes that represent a simple collision mesh

- Hitbox groups - groups of boxes identified by a number (e.g. 0 = helmet, 1 = head, 2 = torso etc.)

- Events - special properties attached to a single frame of an animation: when that animation plays and reaches that frame, the event is executed (such as a sound or a muzzle flash)

- Skins - texture variations of the model

Blends are basically special types of sequences that combine two subsequences with a blending mode, and their blending is controlled by code.
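The idea behind events can be illustrated with a small window check: between two updates, playback moves from one keyframe to another, and every event whose keyframe lies in that window fires. This is a conceptual sketch, not the SDK's implementation, which works on its own frame representation. `AnimEvent` and `EventsCrossed` are hypothetical names, and the sketch assumes that when `to` is smaller than `from`, a looping sequence has wrapped around its end.

```cpp
#include <vector>

// Hypothetical, simplified animation event: a keyframe number and an event id.
// (A compiled model stores more than this, e.g. an options string per event.)
struct AnimEvent {
    int frame; // keyframe the event is attached to
    int id;    // what should happen, e.g. play a sound
};

// Collects the ids of all events whose keyframe was reached while playback
// moved from keyframe 'from' (inclusive) to keyframe 'to' (exclusive).
// If 'to' < 'from', playback is assumed to have wrapped around the end of a
// looping sequence, so the window is [from, end) plus [0, to).
std::vector<int> EventsCrossed(const std::vector<AnimEvent>& events, int from, int to) {
    std::vector<int> fired;
    for (const AnimEvent& ev : events) {
        bool crossed = (from <= to)
            ? (ev.frame >= from && ev.frame < to)
            : (ev.frame >= from || ev.frame < to);
        if (crossed)
            fired.push_back(ev.id);
    }
    return fired;
}
```

Using a half-open window means an event that sits exactly on the boundary between two consecutive checks fires once, not twice.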

Low-level animation control

Now, the question is: how are these concepts concretely implemented? StudioModelRenderer.cpp on the client side contains a lot of code for bone transformation, blending and controllers. It involves so much interpolation and quaternion maths that it deserves its own article, so it won't be analysed here. In the context of entity programming, what we actually do is control the parameters that are interpreted by the engine and the studio model renderer. These parameters are located in entvars_t:

int sequence; // animation sequence

int gaitsequence; // movement animation sequence for player (0 for none)

float frame; // % playback position in animation sequences (0..255)

float animtime; // world time when frame was set

float framerate; // animation playback rate (-8x to 8x)

byte controller[4]; // bone controller setting (0..255)

byte blending[2]; // blending amount between sub-sequences (0..255)

sequence is the animation ID. In a modern engine, you'd typically set animations by name, but here, you set them by number. It is possible to write a utility function to find animations by name, though. You will see how later.

gaitsequence is player-specific, and it controls the leg animation.

frame is the current animation frame. In brushes and sprites, it controls which texture frame is displayed. In models, it represents the animation's progress on a 0..255 scale, so, for example, a value of 127 means the animation is roughly 50% complete.

animtime is the server time when frame was set. This gives the client a reference point in time for the current frame value, so it can interpolate the animation correctly.

framerate does not set the animation's framerate directly. Instead, it is a multiplier: a value of 1.0 means normal playback speed, 2.0 means double speed, and negative values play the animation backwards.

controller is an array of 4 bone controller channels. If you have a controller that goes between -180 and +180 degrees, setting its value to 127 will set its angle to approximately 0 (about -0.7°), and setting it to 0 will mean an angle of -180°.

blending only works if sequence or gaitsequence is a special blend sequence, and it blends between that sequence's sub-sequences.

We also have these:

int skin;

int body; // sub-model selection for studiomodels

skin selects the current texture variation. Let's say we have the following texture group in some model:

$texturegroup arms

{

{ "newarm.bmp" "handback.bmp" "helmet.bmp" }

{ "newarm(dark).bmp" "handback(dark).bmp" "helmet(dark).bmp" }

}skin would basically control which row here is selected.The way

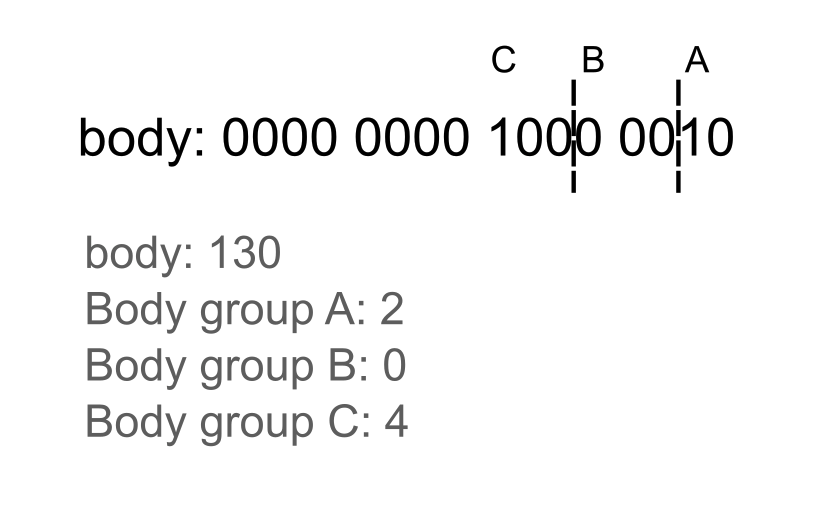

body works is by simply packing multiple "variables" into itself. For example, let's say we have 3 skin groups, where group A would have 4 variations, group B would have 9 variations and group C would have 12 variations. Group A would need only 2 bits, while B and C would need 3 bits:You do not have to perform the packing/unpacking manually, though. More about that in the high-level section.
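Judging by the SDK's SetBodygroup and GetBodygroup helpers in animation.cpp, the packing does not actually use whole bits. Instead, each bodygroup in the compiled model has a `base` field in mstudiobodyparts_t, equal to the product of the variation counts of the bodygroups before it, and body behaves like a mixed-radix number. The standalone sketch below reproduces that arithmetic for the 4/9/12 example. `BodyGroup`, `MakeGroups`, `GetGroup` and `SetGroup` are hypothetical names, not SDK functions.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for a bodygroup: only the two fields the packing needs.
struct BodyGroup {
    int numModels; // number of variations in this group
    int base;      // place value of this group inside 'body'
};

// Computes each group's base as the product of the counts before it,
// e.g. counts {4, 9, 12} give bases {1, 4, 36}.
std::vector<BodyGroup> MakeGroups(const std::vector<int>& counts) {
    std::vector<BodyGroup> groups;
    int base = 1;
    for (int count : counts) {
        groups.push_back({count, base});
        base *= count;
    }
    return groups;
}

// Reads the current variation of one group out of the packed value.
int GetGroup(int body, const BodyGroup& g) {
    return (body / g.base) % g.numModels;
}

// Replaces the variation of one group, leaving the other groups untouched.
int SetGroup(int body, const BodyGroup& g, int value) {
    assert(value >= 0 && value < g.numModels);
    int current = GetGroup(body, g);
    return body - current * g.base + value * g.base;
}
```

With bases 1, 4 and 36, selecting variations 3, 8 and 11 gives body = 3 + 8 * 4 + 11 * 36 = 431, the largest of the 4 * 9 * 12 = 432 possible values. No combination is wasted, as it would be with whole bits.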

Studio model headers

We can also obtain information about the model itself and its animations through its studio model header:

// Get a model pointer for this entity

void* pmodel = GET_MODEL_PTR( pEntity->edict() );

// Interpret that as a studio model header

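studiohdr_t* pStudioHeader = (studiohdr_t*)pmodel;

For example, this prints how many bodyparts a given bodygroup has:

int groupNumber = 2;
// Bodygroups are stored as an array that starts bodypartindex bytes after the header
if ( pStudioHeader && groupNumber < pStudioHeader->numbodyparts )
{
	mstudiobodyparts_t* pBodyGroup = (mstudiobodyparts_t*)((byte*)pStudioHeader + pStudioHeader->bodypartindex) + groupNumber;
	ALERT( at_console, "Bodygroup %i has %i bodyparts\n", groupNumber, pBodyGroup->nummodels );
}

The other parts of the model are reached the same way: for each array, the header stores a count (num...) and a byte offset from the start of the header (...index). In the general pattern below, data_t and dataindex are placeholders for a real type and offset field, such as mstudioseqdesc_t and seqindex:

data_t* pData = (data_t*)((byte*)pStudioHeader + pStudioHeader->dataindex);

High-level animation system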

Earlier, we mentioned that you can write a utility function to find animations by name. Valve's programmers already wrote one long ago: LookupSequence. You can find it in animation.cpp, and this is essentially how it works:

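int LookupSequence( void* pmodel, const char* label )
{
	studiohdr_t* pstudiohdr = (studiohdr_t*)pmodel;
	if ( !pstudiohdr )
		return -1;
	mstudioseqdesc_t* pseqdesc = (mstudioseqdesc_t*)((byte*)pstudiohdr + pstudiohdr->seqindex);
	for ( int i = 0; i < pstudiohdr->numseq; i++ )
	{
		// Sequence names are compared case-insensitively
		if ( stricmp( pseqdesc[i].label, label ) == 0 )
			return i;
	}
	return -1; // no sequence with that name
}

CBaseAnimating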

CBaseAnimating is a base class derived from CBaseDelay that provides many animation utilities:

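- float StudioFrameAdvance( float flInterval ) - Advances the animation by flInterval seconds. In essence, it performs pev->frame += interval, scaled by the sequence's frame rate and by pev->framerate. If flInterval is 0, the time elapsed since pev->animtime is used instead. You would typically call this in think functions.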

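- int GetSequenceFlags() - Gets sequence-specific flags (e.g. "looping").

- int LookupActivity( int activity ) - Obtains a sequence linked to a certain activity. If several sequences match, one of them is picked at random, weighted by their activity weights.

- int LookupActivityHeaviest( int activity ) - Same as above, except it obtains the sequence with the highest weight for said activity, if any.

- int LookupSequence( const char* label ) - Gets a sequence by name.

- void ResetSequenceInfo() - Resets the playback state for the current sequence. It recalculates the sequence's frame rate and ground speed and checks whether it loops. It also sets pev->framerate to 1.0 and pev->animtime to the current time. Call it after changing pev->sequence.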

- void DispatchAnimEvents( float flFutureInterval = 0.1 ) - Catches all animation events within the time window of flFutureInterval and calls HandleAnimEvent for each caught event.

- virtual void HandleAnimEvent( MonsterEvent_t* pEvent ) - This method is meant to be overridden by subclasses.

- float SetBoneController( int iController, float flValue ) - Sets a bone controller's value by ID, in the controller's own units (e.g. degrees), and returns the value actually applied, which may be clipped or otherwise adjusted.

- void InitBoneControllers() - Sets all four controllers to a value of 0 in their own units, so a -180..+180 controller ends up near its midpoint rather than at byte 0.

- float SetBlending( int iBlender, float flValue ) - Sets the blending between two sub-sequences. For example, this lets players bend their torso accordingly when they look up and down.

- void GetBonePosition( int iBone, Vector& origin, Vector& angles ) - Gets the current world origin and angles of a bone.

- void GetAutomovement( Vector& origin, Vector& angles, float flInterval = 0.1 ) - Not implemented.

- int FindTransition( int iEndingSequence, int iGoalSequence, int* piDir ) - Finds a transition sequence to reach the goal sequence. Only used by monster_tentacle.

- void GetAttachment( int iAttachment, Vector& origin, Vector& angles ) - Gets the current world origin and angles of an attachment.

- void SetBodygroup( int iGroup, int iValue ) - Sets the bodypart (iValue) of a chosen bodygroup (iGroup).

- int GetBodygroup( int iGroup ) - Gets the current bodypart ID of the given bodygroup.

- int ExtractBbox( int sequence, float* mins, float* maxs ) - Gets the largest bounding box the entity will have during this sequence.

- void SetSequenceBox() - Calls ExtractBbox and sets the entity's bounding box to the result.
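Two of the methods above, StudioFrameAdvance and SetBoneController, largely come down to simple arithmetic on the entvars_t fields described earlier. The standalone sketch below approximates that arithmetic as it appears in the SDK. `SequenceInfo`, `FrameRate256`, `AdvanceFrame` and `QuantizeController` are hypothetical names. Several details are left out on purpose: the SDK's use of pev->animtime, its handling of rotational controllers that wrap around, and its exact treatment of negative frames, which may differ from this sketch.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical, simplified description of a sequence (illustrative names only).
struct SequenceInfo {
    float fps;     // keyframes per second, as compiled into the model
    int numFrames; // length of the sequence in keyframes
    bool loops;    // true if the sequence is flagged as looping
};

// 'frame' covers the whole sequence on a 0..256 scale, so one second of
// playback advances it by 256 * fps / (numFrames - 1).
float FrameRate256(const SequenceInfo& seq) {
    return seq.numFrames > 1 ? 256.0f * seq.fps / (seq.numFrames - 1) : 256.0f;
}

// Advances 'frame' by 'interval' seconds, scaled by the playback multiplier
// (what pev->framerate holds). Looping sequences wrap around; others are
// clamped. Returns true if playback ran past either end of the sequence.
bool AdvanceFrame(float& frame, float interval, float playbackRate, const SequenceInfo& seq) {
    frame += interval * FrameRate256(seq) * playbackRate;
    if (frame >= 0.0f && frame < 256.0f)
        return false;
    if (seq.loops) {
        frame = std::fmod(frame, 256.0f);
        if (frame < 0.0f)
            frame += 256.0f;
    } else {
        frame = (frame < 0.0f) ? 0.0f : 255.0f;
    }
    return true;
}

// Converts a value in the controller's own units (e.g. degrees) into the
// 0..255 byte stored in pev->controller[], clamping it to [start, end].
// Returns the value the stored byte actually represents.
float QuantizeController(float value, float start, float end, unsigned char& outByte) {
    assert(end != start);
    int setting = static_cast<int>(255.0f * (value - start) / (end - start));
    if (setting < 0)
        setting = 0;
    if (setting > 255)
        setting = 255;
    outByte = static_cast<unsigned char>(setting);
    return setting * (end - start) / 255.0f + start;
}
```

For a -180..+180 controller, asking for 0 degrees stores the byte 127, which represents roughly -0.7 degrees. This is why the returned value of SetBoneController can differ slightly from the one you passed in.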

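The CBaseAnimating methods above are implemented in animating.cpp, mostly as thin wrappers around the helper functions in animation.cpp. If you write an entity that is intended to use animated models, you will definitely want it to inherit from CBaseAnimating. An example animating entity will be shown on the next page.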

- Categories

- Tutorials

- Programming

- Goldsource Tutorials

- Article Credits

- Admer456 - Original author

